Thu Jun 1, 2017

Concept

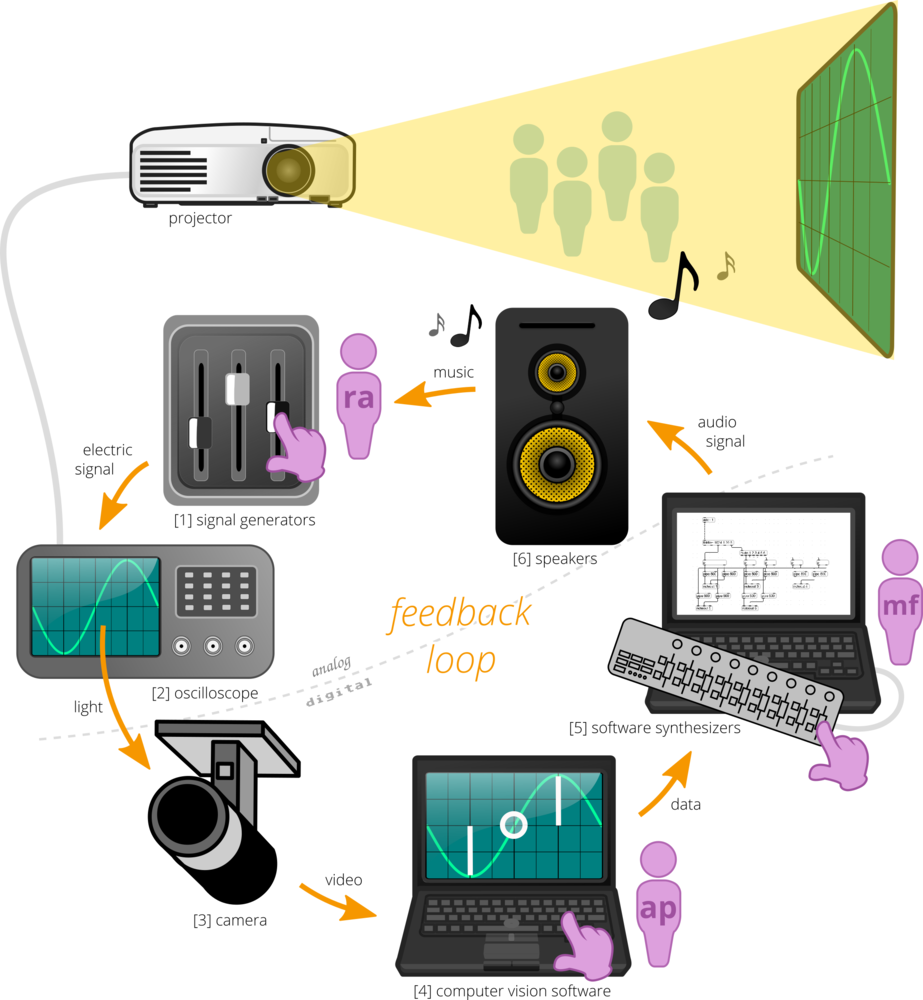

Kū, the Void is a synesthetic, live-generated audiovisual experience that combines hacked scientific technology and custom-developed software to expressively explore the ephemeral/immaterial. Rich, evolving oscilloscope visuals (Ran Ancor) are synchronized via computer vision (Abe Pazos) with a lush, responsively synthesized soundscape (Mei-Fang Liau). In this hybrid system, sensitively fluctuating analog signals, fine-tuned data analysis, and human intuition perform together as one coherent living being.

空 Kū

Kū is a Japanese word, most often translated as “Void”, but also meaning “Sky” or “Heaven”. Kū, as we attempt to manifest it in this work, represents those things beyond our everyday experience, particularly those things composed of pure energy. Kū represents spirit, thought, and creative energy, as well as our ability to think and to communicate. Kū is not the void in the sense of nothingness, but a totality, a universal freedom, beyond the normal conditions of existence.

Light, Sound, Synesthesia

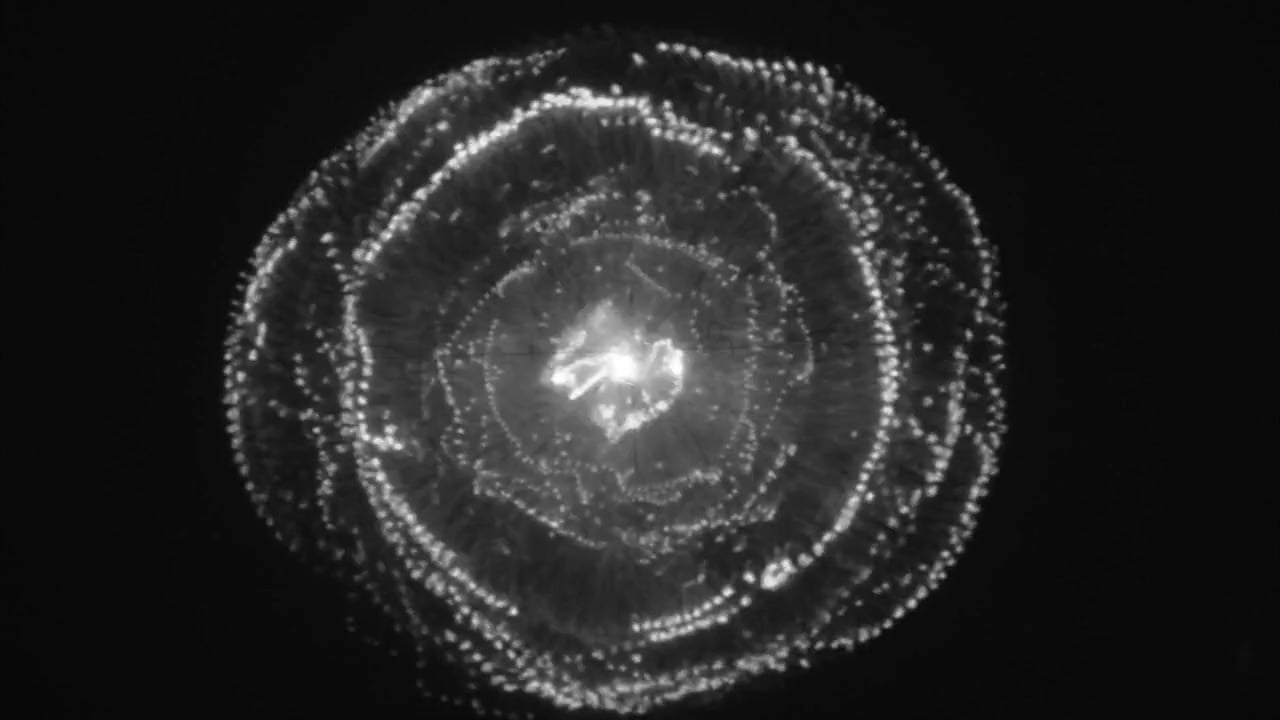

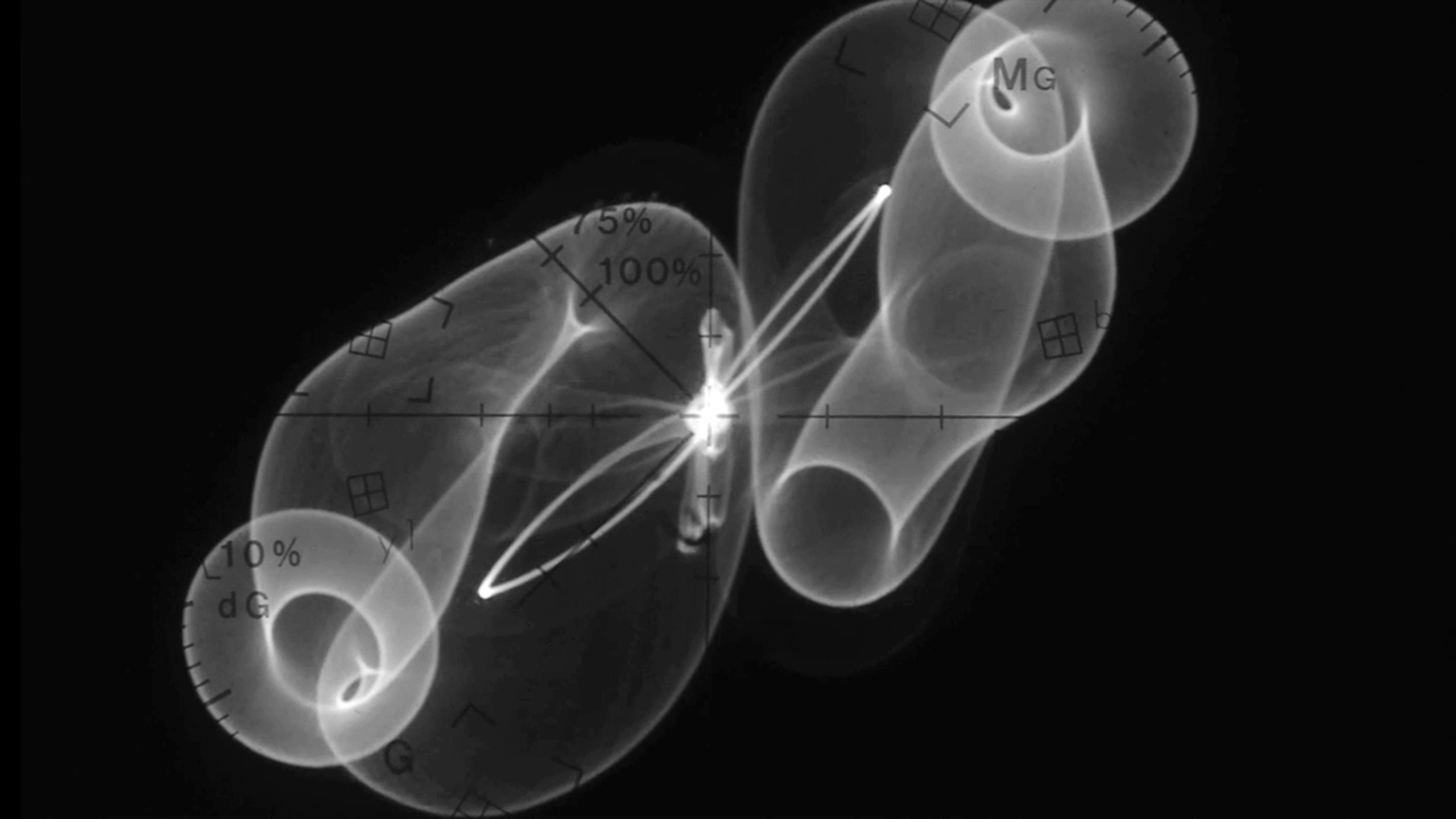

Humans usually see light indirectly, as it is reflected by illuminated objects in our environment. In certain cases, however, light can be perceived as a direct, concrete phenomenon: when staring at fire, incandescent light bulbs, or stars in the sky. Pure light is Ran Ancor’s ongoing visual study, and he uses analog hardware such as oscilloscopes to transform signals into abstract, evolving shapes of light. The screen of an oscilloscope allows us to see direct light without reference objects, while still enabling the artist to manipulate and explore the light source and the images it produces. A certain degree of unpredictability, noise, and the lack of quantization in analog systems produce seemingly organic aesthetics and behaviors. This creates an unusual situation for our perception, which continuously tries to recognize something familiar within the abstract but organic form.

Synesthesia is a perceptual phenomenon in which stimulation of one sensory or cognitive pathway leads to automatic experiences in a second sensory or cognitive pathway. The concept of synesthesia in the arts is regarded as the simultaneous perception of multiple stimuli in one gestalt experience, often in genres like visual music and music visualization. We believe our approach to visual sonification can be included in the same category.

To achieve the synesthetic link between visual and auditory perception, Abe Pazos and Mei-Fang Liau (the duo known as Floating Spectrum) develop, in close collaboration, custom software that combines computer vision analysis with complex generated musical output.

This software observes the images produced by an oscilloscope in real time, constructing a data-to-sound pipeline that responds quickly to the visuals. This allows Mei-Fang Liau’s musical performance to build upon, but also surpass, her own real-time human perception.

Technique & Process

Audiovisual feedback loop

From oscilloscopes to animated light

The oscilloscope is an electronic test instrument that enables the observation of varying signal voltages. The change of this signal over time describes shapes that are continuously graphed against a calibrated scale. In an analog oscilloscope, light is produced when an electron beam, deflected by electric fields, strikes the phosphor-coated screen. It is like drawing on sand: evanescent images that are soon washed away by incoming waves.

To produce visuals using such a system, the first step is to generate and modulate signals. A number of hardware-controlled signal processing devices produce and shape signals which are delivered to the oscilloscope, which functions as the equivalent of the speaker in audio systems.

A large part of the creative process consists of envisioning and building the initial paths that the signal must pass through, each of which modifies its structure. A series of oscillators, filters, amplifiers, envelope generators, etc. are used for that purpose. In this modular system, minor variations in input often produce substantial fluctuations in the final composition, echoing the butterfly effect of chaos theory. After traveling through this series of influences, the signal finally reaches the last stage of the electrical signal modulation process: the oscilloscope and image production.
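As a rough digital illustration of such a signal path, the sketch below feeds two sine oscillators through a gain stage and plots them against each other, as an oscilloscope would in X-Y mode. This is only an assumption-laden toy: the actual system is analog hardware, the use of X-Y mode is assumed rather than stated, and the names `oscillator`, `amplifier`, and `xy_trace` are hypothetical.

```python
import math


def oscillator(freq_hz, phase, t):
    """Sine oscillator sampled at time t (seconds)."""
    return math.sin(2 * math.pi * freq_hz * t + phase)


def amplifier(sample, gain):
    """Simple gain stage: scales the signal amplitude."""
    return gain * sample


def xy_trace(freq_x, freq_y, phase, gain=1.0, n_points=1000, duration=1.0):
    """Return the (x, y) beam positions for two oscillators driving the
    horizontal and vertical deflection (assumed X-Y mode)."""
    points = []
    for i in range(n_points):
        t = duration * i / n_points
        x = amplifier(oscillator(freq_x, 0.0, t), gain)
        y = amplifier(oscillator(freq_y, phase, t), gain)
        points.append((x, y))
    return points


# Equal frequencies with a 90 degree phase offset trace a circle.
circle = xy_trace(1.0, 1.0, math.pi / 2)

# Changing only the frequency ratio produces a very different figure.
figure_eight = xy_trace(1.0, 2.0, 0.0)
```

Even in this simplified model, a change to a single parameter (the frequency ratio or the phase) reshapes the whole figure, which hints at why small adjustments in the hardware chain can alter the image so strongly.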

Computer Vision and Data Sonification

Computer vision is an interdisciplinary field that deals with how computers can be made to gain high-level understanding from digital images or videos. It seeks to automate tasks that the human visual system can do.

The program created by Abe Pazos uses feature extraction to identify abstract visual features often recognizable to human vision (such as brightness, smoothness, noisiness, symmetry, and shape). It also tracks the changing visual elements through time, trying to identify the sort of motion events that humans would notice and respond to. The static and moving visual data is combined and transferred with low latency to Liau’s music generation program in a live performance environment.
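To give a sense of what such features could look like in code, here is a minimal sketch that computes brightness, left-right symmetry, and frame-to-frame motion on tiny grayscale frames. It is not the program described above: the frame representation (lists of rows with values from 0.0 to 1.0) and the function names are assumptions made for illustration only.

```python
def brightness(frame):
    """Mean pixel intensity, from 0.0 (black) to 1.0 (white)."""
    total = sum(sum(row) for row in frame)
    return total / (len(frame) * len(frame[0]))


def horizontal_symmetry(frame):
    """1.0 when the left half mirrors the right half, lower otherwise."""
    diff = 0.0
    count = 0
    for row in frame:
        width = len(row)
        for x in range(width // 2):
            diff += abs(row[x] - row[width - 1 - x])
            count += 1
    return 1.0 - diff / count if count else 1.0


def motion(prev_frame, frame):
    """Mean absolute per-pixel change between two consecutive frames."""
    total = 0.0
    for prev_row, row in zip(prev_frame, frame):
        for a, b in zip(prev_row, row):
            total += abs(a - b)
    return total / (len(frame) * len(frame[0]))


# Two hypothetical 4x2 frames: a mirrored pattern, then a one-sided one.
frame_a = [[0.0, 1.0, 1.0, 0.0],
           [1.0, 0.0, 0.0, 1.0]]
frame_b = [[1.0, 1.0, 0.0, 0.0],
           [1.0, 1.0, 0.0, 0.0]]

features = {
    "brightness": brightness(frame_b),
    "symmetry": horizontal_symmetry(frame_b),
    "motion": motion(frame_a, frame_b),
}
```

Each function reduces a whole image to a single number per frame, which is the kind of compact value that can be sent to a sound program with low latency.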

The sound creation process involves observing ten real-time visual data streams generated by the computer vision algorithms. These data streams are used as the starting point for the sound system design. Mei-Fang Liau observes the visual representation of the data streams and the hidden relationships between them, which are not always obvious from looking at the visuals. She then explores how they can best be translated into musical elements. These elements need to work in harmony and be the intuitive counterparts of the visual side. As the last stage, the song structure is created in real time by the computer together with the musician, through the flow of data that passes through the generative sound system.
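One common way to turn a data stream into a musical parameter is sketched below: the incoming value is smoothed, then mapped exponentially onto a frequency range so that equal steps in the data sound like equal musical intervals. The actual mappings used in the piece are not documented here, so the functions `smooth`, `to_frequency`, and `to_midi_note`, the 110 to 880 Hz range, and the sample stream are all illustrative assumptions.

```python
import math


def smooth(previous, current, amount=0.8):
    """One-pole low-pass filter: keeps `amount` of the previous value so
    that jittery visual data does not produce jittery sound."""
    return amount * previous + (1.0 - amount) * current


def to_frequency(value, low_hz=110.0, high_hz=880.0):
    """Map a normalized value (0.0 to 1.0) to a frequency on an
    exponential scale between low_hz and high_hz."""
    value = min(1.0, max(0.0, value))  # clamp out-of-range input
    return low_hz * (high_hz / low_hz) ** value


def to_midi_note(freq_hz):
    """Nearest MIDI note number for a frequency (A4 = 440 Hz = note 69)."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))


# A hypothetical stream, e.g. a brightness value for successive frames.
stream = [0.0, 0.2, 0.9, 1.0, 1.0, 0.4]

state = stream[0]
notes = []
for value in stream:
    state = smooth(state, value)
    notes.append(to_midi_note(to_frequency(state)))
```

In a full system each of the data streams could drive a different parameter (pitch, filter cutoff, density, and so on), with the musician choosing and adjusting the mappings by ear.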

Links

Past Performances

- Radical db. Winner of the international experimental music contest. Zaragoza, Spain, Nov 7 - 10, 2018

- Transmediale Vorspiel 2017. Spektrum Berlin. Feb 12, 2017

Video Exhibitions

- Punto y Raya. Finalist at the 8th edition of the Punto y Raya abstract film festival. Wrocław, Poland, Oct 25 - 28, 2018

- GENERATE!° - Festival für elektronische Künste. Tübingen, Germany, Oct 20 - Nov 11, 2017

- MADATAC. Madrid, Spain, Jan 10 - 30, 2018