Tue Oct 1, 2019

Find the full album at https://floatingspectrum.bandcamp.com/

The making of a music video

In 5 weeks the music video for the track Inner Island will be launched. The last time I did a 3D animation was for a competition with Amiga computers about 20 years ago (2nd prize!). Fortunately I have experience in computer graphics, editing video tutorials, 2D and 3D animation, GPU shaders, photography and generative art. Maybe I can figure something out.

My first idea is to write software that generates the video clip in real time.

I start writing an openFrameworks program, but I quickly realize that I would spend most of my time figuring out technical issues instead of focusing on story and aesthetics.

My attempt to create my own software doesn’t get very far

That leaves some other options: putting together a video clip with abstract footage or animating the whole piece in 3D. In both cases there should be camera cuts. I have seen clips with just one or a few long takes, but I’m not convinced. I need to figure out how long those cuts should be. I study music video clips online and then try it myself with the Kdenlive video editor, making a collage of light reflection and refraction clips cut in sync with the music.

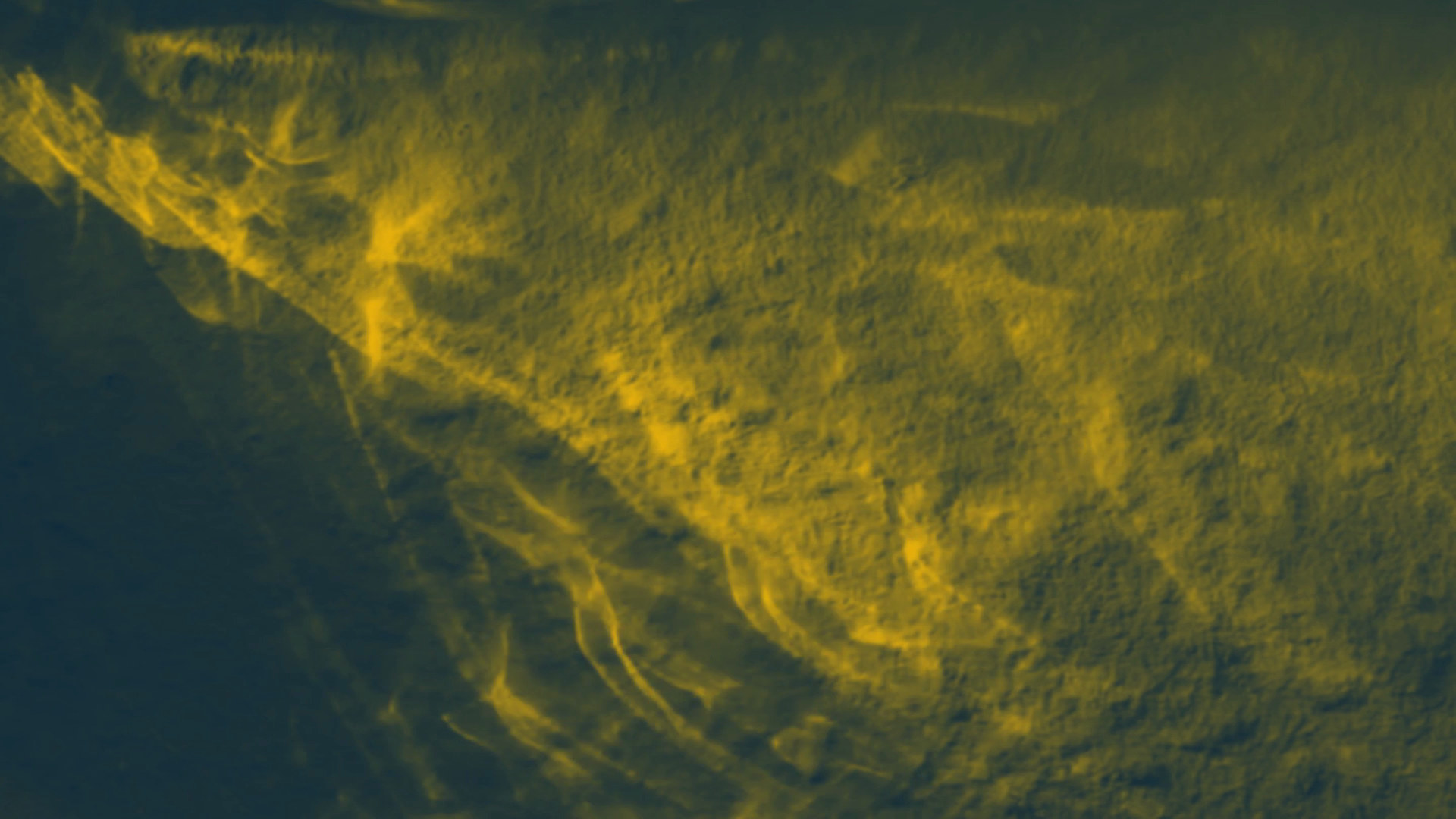

A frame from a test video based on abstract footage

Two things result from that experiment: what might work in terms of cut lengths and the realization that editing a good piece out of abstract clips would not work with the editing tools I have. So it’s decided: I’ll produce the piece using Blender.

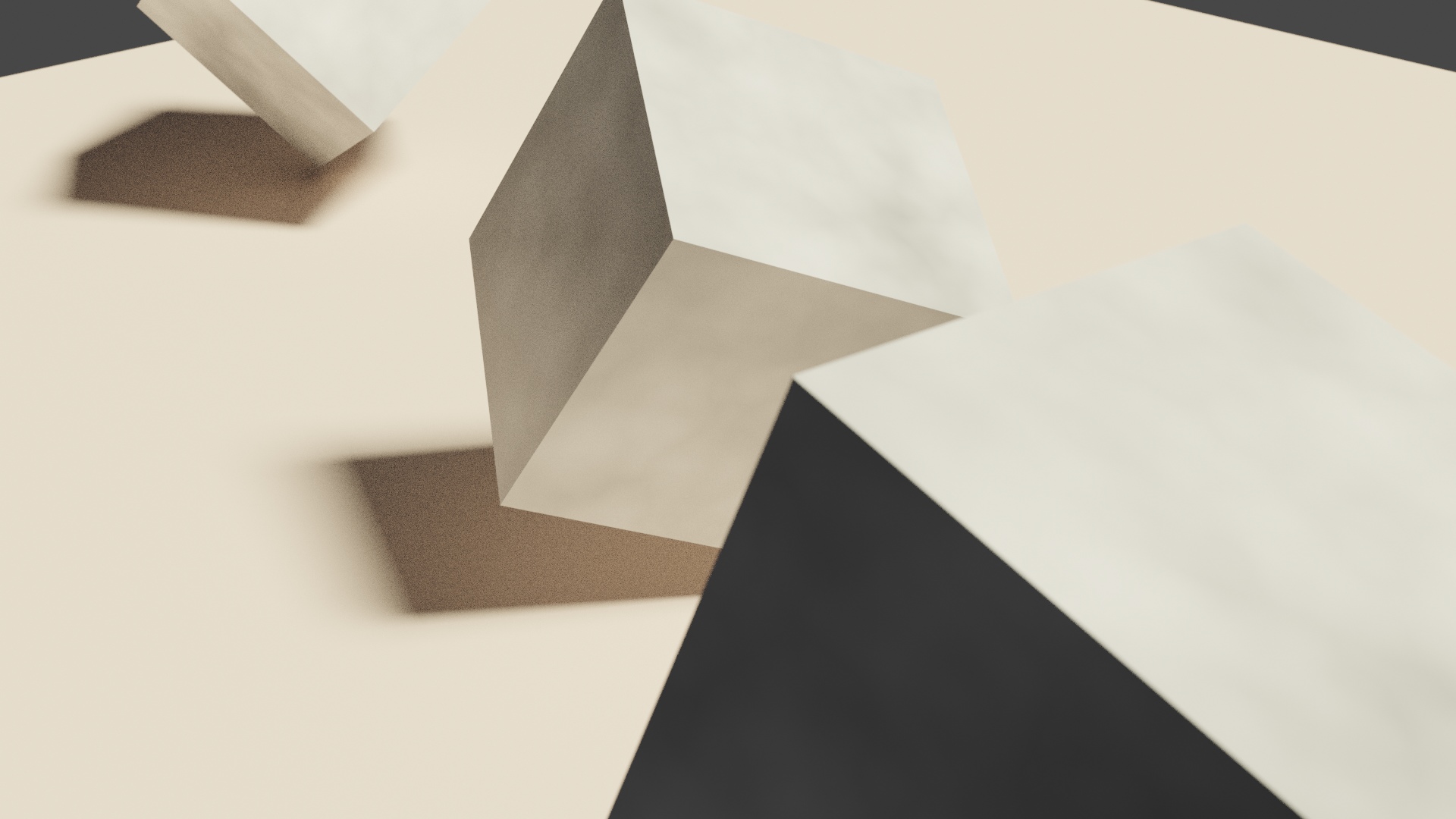

I watch all the Blender tutorials I find about topics I might need and animate my first cubes with depth of field.

Frame from an early test using Blender

Not impressive, but it moves :-) Animating in Blender seems not so hard. I can create key frames for any property in my 3D world (positions, rotations, colors…) and the software interpolates those values smoothly over time. Very good.
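To make the keyframe idea concrete, here is a minimal sketch in plain Python. It assumes a simple smoothstep ease between neighbouring keys; Blender’s default Bézier interpolation is more flexible, so treat this only as an approximation of the behaviour, not Blender’s actual algorithm. The function names are made up for illustration.

```python
from bisect import bisect_right

def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve: 0 -> 0, 1 -> 1, zero slope at both ends."""
    return t * t * (3.0 - 2.0 * t)

def evaluate(keys: list[tuple[float, float]], frame: float) -> float:
    """Return the animated value at `frame` given (frame, value) keys sorted by frame."""
    frames = [f for f, _ in keys]
    if frame <= frames[0]:
        return keys[0][1]  # hold the first value before the first key
    if frame >= frames[-1]:
        return keys[-1][1]  # hold the last value after the last key
    i = bisect_right(frames, frame)  # keys[i - 1] <= frame < keys[i]
    (f0, v0), (f1, v1) = keys[i - 1], keys[i]
    t = (frame - f0) / (f1 - f0)  # 0..1 progress between the two keys
    return v0 + (v1 - v0) * smoothstep(t)

# A rock rising from z = 0 to z = 2 between frames 1 and 49, then settling at 1.5.
z_keys = [(1, 0.0), (49, 2.0), (73, 1.5)]
for frame in (1, 25, 49, 61, 100):
    print(frame, round(evaluate(z_keys, frame), 3))
```

Only the keys are stored; every in-between frame is computed, which is why moving one key reshapes the whole motion around it.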

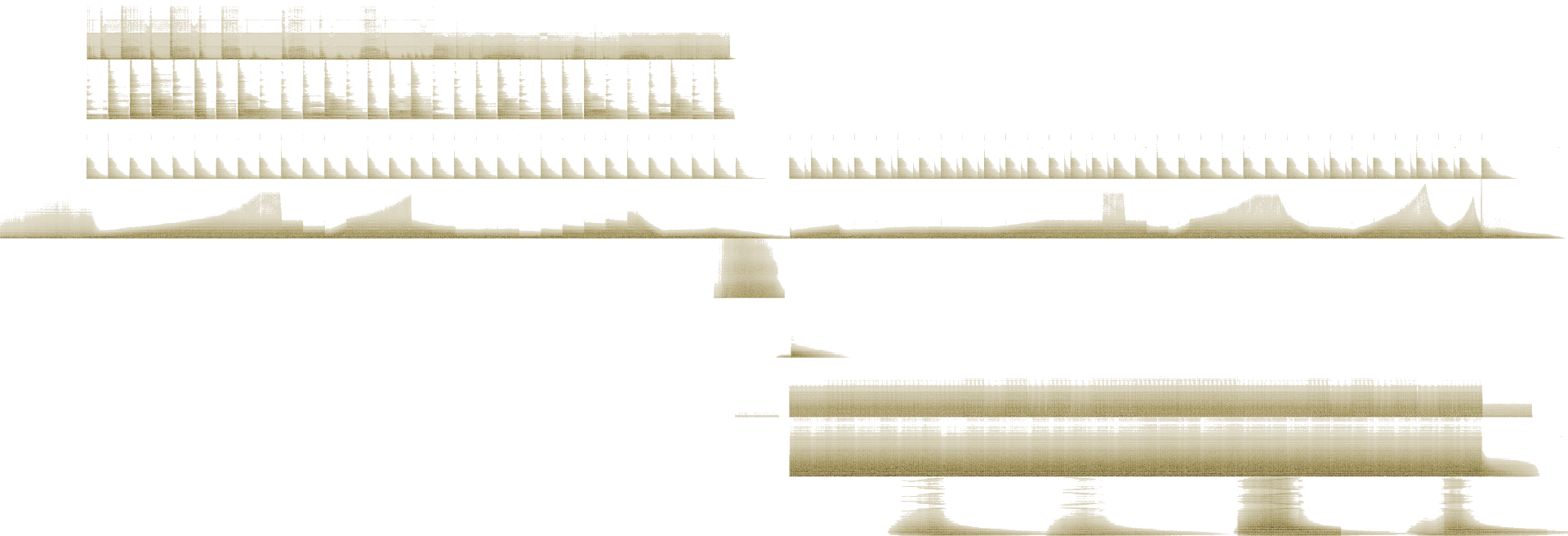

I analyze the 9 audio channels of the song in case they give me ideas. I see rhythm, and that the song obviously has two parts. I create a spreadsheet listing the frames in which things should definitely happen in reaction to percussive sounds.

FFT analysis of the Inner Island song by Floating Spectrum
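A list of percussive frames like the one in that spreadsheet can also be bootstrapped automatically. The sketch below is a crude energy-based onset detector in plain Python, not the FFT analysis shown above: it measures the audio energy in windows of exactly one video frame (sample rate / 24 samples) and reports the frames where the energy jumps sharply. The `ratio`, `min_gap` and `floor` values are arbitrary assumptions to tune by ear, and the synthetic test signal stands in for a real audio channel.

```python
import math

FPS = 24

def frame_energies(samples: list[float], sample_rate: int, fps: int = FPS) -> list[float]:
    """Mean squared amplitude of the audio covered by each video frame."""
    hop = sample_rate // fps
    return [
        sum(s * s for s in samples[i:i + hop]) / hop
        for i in range(0, len(samples) - hop + 1, hop)
    ]

def onset_frames(samples, sample_rate, ratio=4.0, min_gap=3, floor=1e-6):
    """1-based frame numbers where energy rises by `ratio` over the previous frame."""
    energies = frame_energies(samples, sample_rate)
    onsets = []
    for i in range(1, len(energies)):
        rising = energies[i] > ratio * max(energies[i - 1], floor)
        if rising and (not onsets or i + 1 - onsets[-1] >= min_gap):
            onsets.append(i + 1)  # Blender timelines start at frame 1 by default
    return onsets

# Synthetic test: 2 s of silence with two short decaying 440 Hz "hits".
sr = 4800
signal = [0.0] * (2 * sr)
for start in (0.5, 1.25):
    s0 = int(start * sr)
    for n in range(sr // 10):  # 100 ms burst
        signal[s0 + n] = math.exp(-n / 100) * math.sin(2 * math.pi * 440 * n / sr)
print(onset_frames(signal, sr))
```

Hits at 0.5 s and 1.25 s land on frames 13 and 31 at 24 FPS. Real drum stems would need a smarter detector, but the output is already a usable first draft of the cue list.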

I watch existing music videos like 1 2 3 4 and 5 for inspiration. Some feel aesthetically impressive, but I often miss a story. The shape of stories is on my mind. I don’t necessarily need a clear story, but a sense of starting somewhere and ending somewhere else.

Photogrammetry

In the evenings we visit beautiful rocky landscapes and go for a swim. Since Blender might not be a hard enough challenge by itself (what!?), let’s get into photogrammetry at the same time! Every evening I capture dozens of photos of rocky landscapes, then let the computer work through the night converting them into 3D meshes.

Lluc Alcari, one of the backgrounds featured in the video clip

Those landscapes will be the stage of my movie. I learn how to use Meshroom to convert photos into 3D models and Meshlab to simplify and clean them up afterwards.

A 3D model shown in Meshlab

Creating models of the backgrounds works quite well. I choose times and locations without direct sunlight to avoid strong shadows in the textures. That way I can later place a virtual sun where it works best for the scene. In total we scan 36 environments. I enjoy the process.

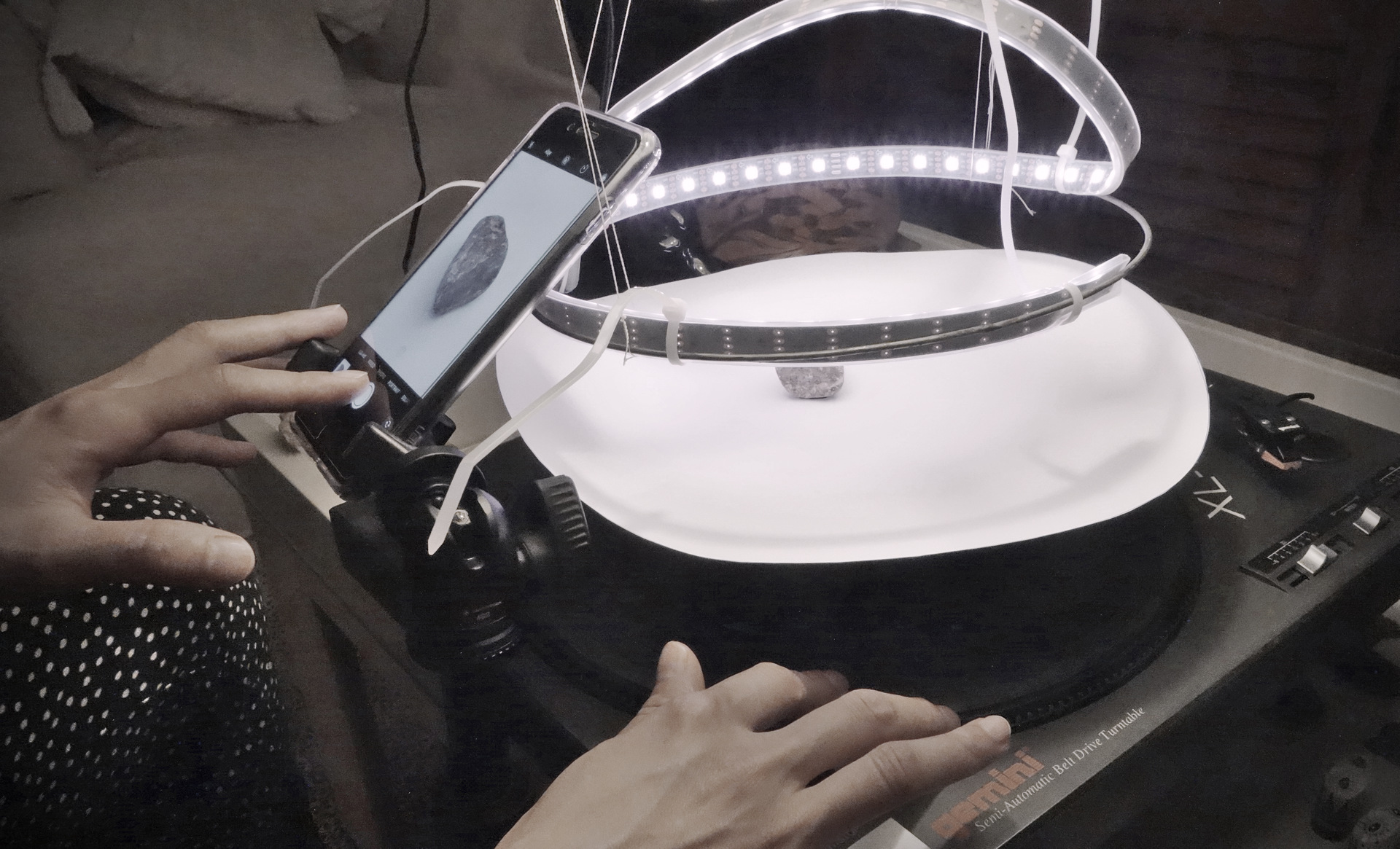

On the other hand, we discover that scanning stones smaller than 10 cm (the little actors in my film) is very challenging. We first use a heavily improvised turntable and a round piece of paper with marks at regular intervals to photograph rocks from all the needed angles. I adjust the color and intensity of an LED strip I took with me on “vacation” to obtain photographs with natural-looking colors.

Using a turntable to scan small rocks
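A marker disc like that is easy to generate and print. The sketch below writes an SVG with a radial tick every `360 / marks` degrees, with every sixth tick longer to make counting easier. The 24-mark default, the 150 mm diameter and the function name are assumptions for illustration; it only produces the paper template.

```python
import math

def turntable_svg(marks: int = 24, diameter_mm: float = 150.0) -> str:
    """Return an SVG of a printable disc with `marks` evenly spaced radial ticks."""
    r = diameter_mm / 2
    c = r + 5  # disc centre, leaving a 5 mm margin around the circle
    ticks = []
    for k in range(marks):
        angle = 2 * math.pi * k / marks
        inner = r * (0.75 if k % 6 == 0 else 0.85)  # every 6th tick is longer
        x1, y1 = c + inner * math.cos(angle), c + inner * math.sin(angle)
        x2, y2 = c + r * math.cos(angle), c + r * math.sin(angle)
        ticks.append(
            f'<line x1="{x1:.2f}" y1="{y1:.2f}" x2="{x2:.2f}" y2="{y2:.2f}" '
            'stroke="black" stroke-width="0.8"/>'
        )
    size = 2 * c
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{size}mm" height="{size}mm" '
        f'viewBox="0 0 {size} {size}">'
        f'<circle cx="{c}" cy="{c}" r="{r}" fill="none" stroke="black"/>'
        + "".join(ticks)
        + "</svg>"
    )

svg = turntable_svg()
```

Saving the returned string to a `.svg` file and printing it at 100% scale gives the template; with 24 marks, each photo is taken after a 15° turn.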

I later transform the LED strip into a cylindrical light mount I can attach to the camera for even more uniform lighting.

LED strip mobile phone attachment for uniform lighting

Unfortunately, lighting does not seem to be the problem. Instead, photographs shot at close distance have a very shallow depth of field (large blurred areas), which makes it impossible to match points across photos. There are solutions, but none we can implement in such a short time, so after a few successful scans and several failed ones I decide to buy the remaining stones from an online platform. It is sad because I carefully picked the right stones for the movie, and it is also a challenge because most of the stones I find online don’t have the look I’m after.
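The depth-of-field problem can be quantified with the standard thin-lens formulas, using the hyperfocal distance H = f²/(N·c) + f. The phone-like values below (4 mm focal length, f/1.8, 0.005 mm circle of confusion) are assumptions for illustration, not measurements of the camera used for the scans.

```python
def depth_of_field(s_mm: float, f_mm: float = 4.0, n: float = 1.8, c_mm: float = 0.005):
    """Return (near, far) limits of acceptable sharpness in mm for focus distance s."""
    h = f_mm ** 2 / (n * c_mm) + f_mm  # hyperfocal distance
    near = s_mm * (h - f_mm) / (h + s_mm - 2 * f_mm)
    # Beyond the hyperfocal distance everything up to infinity is sharp.
    far = s_mm * (h - f_mm) / (h - s_mm) if s_mm < h else float("inf")
    return near, far

for s in (100, 300, 1000):  # focus at 10 cm, 30 cm and 1 m
    near, far = depth_of_field(s)
    print(f"focus at {s} mm: sharp from {near:.0f} to {far:.0f} mm ({far - near:.0f} mm deep)")
```

Under these assumptions only about a centimetre is sharp at 10 cm, less than the depth of the stone itself, while at 1 m more than a metre and a half is sharp. That matches the blurred areas that made point matching fail on small rocks but not on landscapes.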

The story

For years I’ve been interested in knowing more about storytelling, but being interested is not closely related to being able to do it. Five minutes and ten seconds is a very long time: I’m 7522 rendered frames away from my goal. What kind of story to tell? This is what I come up with:

There are stones resting in different rocky landscapes. One by one the stones take off and fly to a place where they all meet. They are up to something, they have a goal. The stones stage a choreography trying to unlock a transformation of the world. They know organic worlds exist. It is their promised land, and they want to summon it.

The stones attempt the right sequence of moves but something goes wrong. Maybe they were not ready for it? Instead of greener landscapes they teleport to flat and gray urban sidewalks and streets. After the initial shock some of the rocks begin trying to undo the mess. Apparently there is some kind of response, which convinces more rocks to collaborate instead of just observing. The effort seems to pay off, and the burned-out spiritual leader slowly levitates, getting closer and closer to their destination.

Unfortunately the effort is way too great and all participants crash down exhausted in a new world. Not better, not organic, but just different.

It is the story of a creative mind rewriting the same page over and over, trying to come up with a good story.

A story about a story.

The missing Blender tutorial

The idea and the deadline are both there. But an important piece of information is missing: I find nothing about how to make a movie in Blender. The tutorials show how to create a donut, how to make something explode into little pieces, how to create beautiful shadows, fluid simulations and endless other details. But there is no big-picture information: How do I go from nothing to a full movie? Should I use multiple scenes or is one enough? How do I manage my assets? How do I organize the textures and layers? How do I become a successful movie director in 48 hours? I guess I’ll have to figure it out myself.

The roadmap

I need to plan ahead to make sure I don’t miss the deadline. I start at the end, of course: render time, which is directly proportional to the number of frames to produce. I do these quick calculations:

duration: 5:10 = 310 seconds × 24 FPS (30 FPS) = 7440 (9300) frames

at 10 min. per frame ≈ 52 (65) days render time. NOT DOABLE.

at 1 min. per frame ≈ 5 (6.5) days render time. MAYBE.

Conclusion: I need to render each frame in less than one minute, and I should go for 24 frames per second to save 20% rendering time compared to 30 FPS.
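The same back-of-the-envelope budget as a few lines of Python, handy for re-running the numbers whenever the duration or the per-frame estimate changes. The function name is just illustrative.

```python
DURATION_S = 5 * 60 + 10  # 5:10 -> 310 seconds

def render_days(fps: int, minutes_per_frame: float, duration_s: int = DURATION_S) -> float:
    """Total render time in days for the whole clip."""
    frames = duration_s * fps
    return frames * minutes_per_frame / (60 * 24)  # minutes -> days

for fps in (24, 30):
    print(f"{fps} FPS: {DURATION_S * fps} frames, "
          f"{render_days(fps, 10):.1f} days at 10 min/frame, "
          f"{render_days(fps, 1):.1f} days at 1 min/frame")

print(f"24 FPS saves {1 - 24 / 30:.0%} of the frames compared to 30 FPS")
```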

Fortunately, a new version of Blender has been released with a new rendering engine called Eevee. This turns out to be essential during editing because it lets me preview most scenes in real time. It is truly remarkable to move through distant, familiar landscapes in real time as if I were there.

Eevee will be equally key during the final render: it should be doable in a few hours at full HD resolution. The mission seems not completely impossible, but will Blender be able to handle it? Will I?

I plan the next 5 weeks as follows: in each of the first 3 weeks I need to produce 103.33 seconds of animation (2480 frames). The remaining 2 weeks are for polishing and rendering. Let’s go.

Rocks being rocks

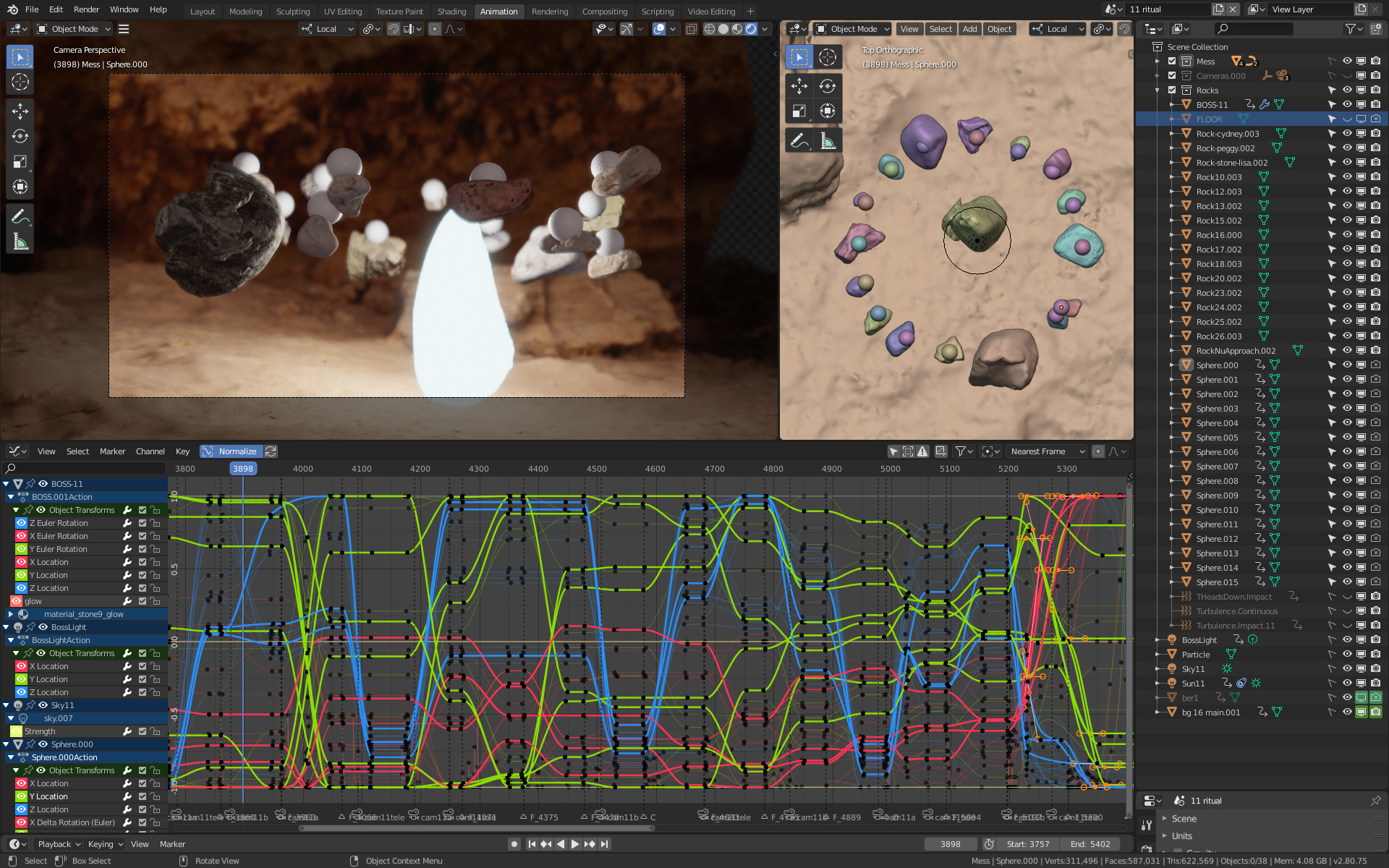

The way I imagine this movie, rocks move through the air and sometimes collide with each other, but in 3D animation software objects normally pass through each other. There are tutorials explaining how to drop objects in free fall and make them collide, or how to throw an object at resting objects and simulate all the collisions. But what about rocks with intention? How can I direct multiple objects while simulating realistic collisions? That’s an essential part of my plan.

Invisible spheres set the target rock locations

I ask online “How to animate object target positions while respecting rigid body collisions?” and one answer helps me figure out a solution. I can have each rock hanging from an invisible thread that is held by an invisible hand (a sphere, actually). When I move the hand, the rock follows. Close, but not quite, because I want rocks with free will, not marionette rocks. What works is placing the invisible hand and the rock in exactly the same location, so the invisible thread has zero length. This lets rocks follow their hands and gives them room to move apart when collisions happen, without the marionette behavior.
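The zero-length thread idea can be illustrated outside Blender with a toy 2D model in plain Python. This is not Blender’s rigid body solver or its constraint API: each rock is pulled towards its animated hand by a spring with zero rest length, and a separate collision pass pushes overlapping rocks apart. The circular rocks, the stiffness and the damping values are assumptions chosen only to keep the toy stable at 24 FPS.

```python
import math
from dataclasses import dataclass

@dataclass
class Rock:
    x: float
    y: float
    radius: float = 1.0
    vx: float = 0.0
    vy: float = 0.0

def step(rocks, hands, dt=1 / 24, stiffness=40.0, damping=8.0):
    """Advance the toy simulation by one frame; hands holds one (x, y) target per rock."""
    # 1. Zero-length thread: a damped spring pulls each rock straight to its hand.
    for rock, (hx, hy) in zip(rocks, hands):
        ax = stiffness * (hx - rock.x) - damping * rock.vx
        ay = stiffness * (hy - rock.y) - damping * rock.vy
        rock.vx += ax * dt  # semi-implicit Euler: update velocity first,
        rock.vy += ay * dt
        rock.x += rock.vx * dt  # then position with the new velocity
        rock.y += rock.vy * dt
    # 2. Collisions: separate overlapping rocks and cancel their approach speed.
    for i, a in enumerate(rocks):
        for b in rocks[i + 1:]:
            dx, dy = b.x - a.x, b.y - a.y
            dist = math.hypot(dx, dy)
            overlap = a.radius + b.radius - dist
            if overlap <= 0:
                continue
            nx, ny = (dx / dist, dy / dist) if dist > 0 else (1.0, 0.0)
            a.x -= nx * overlap / 2
            a.y -= ny * overlap / 2
            b.x += nx * overlap / 2
            b.y += ny * overlap / 2
            approach = (b.vx - a.vx) * nx + (b.vy - a.vy) * ny
            if approach < 0:  # moving towards each other: inelastic contact
                a.vx += nx * approach / 2
                a.vy += ny * approach / 2
                b.vx -= nx * approach / 2
                b.vy -= ny * approach / 2

# Two rocks whose hands meet at the same spot end up touching, not overlapping.
rocks = [Rock(-5.0, 0.0), Rock(5.0, 0.0)]
for frame in range(240):
    step(rocks, [(0.0, 0.0), (0.0, 0.0)])
print([(round(r.x, 2), round(r.y, 2)) for r in rocks])
```

With a thread of non-zero length the rock would dangle below its hand like a marionette; with zero rest length it sits on the hand whenever nothing is in the way and only deviates when a collision pushes it.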

Organizing the project in scenes

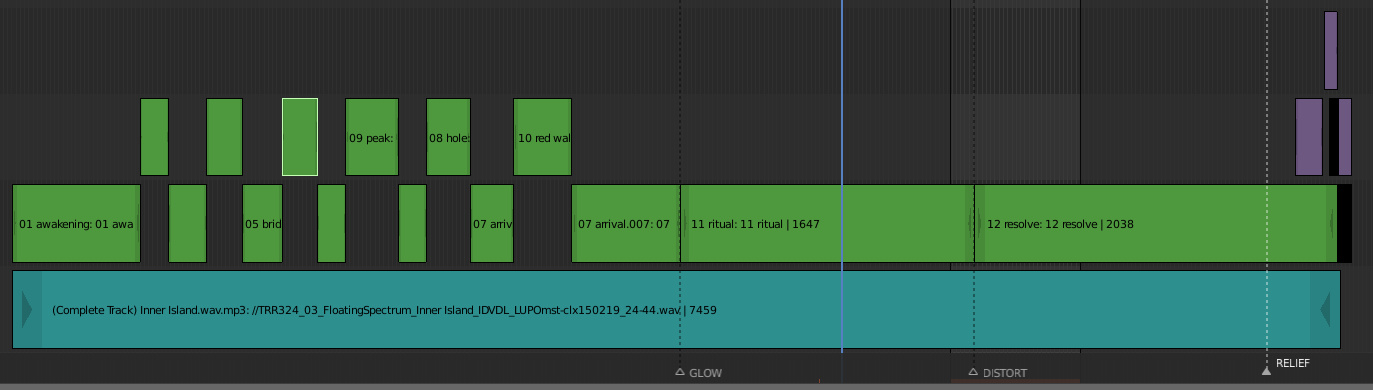

I have decided to organize the project into 12 scenes. I want certain actions to be synchronized to the music, so each scene has precise start and end frames, with the soundtrack aligned to frame 1. This lets me preview any scene with synchronized sound.

The timeline showing different scenes in sync with the music, credits at the end
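Because every scene shares the song’s frame numbering, a cue frame from the percussion spreadsheet can be assigned to a scene with a simple lookup, and the scene ranges can be checked for gaps or overlaps. A minimal sketch; the scene names and frame ranges are made up, and in Blender each range would correspond to a scene’s start and end frame settings.

```python
from bisect import bisect_right

# Hypothetical scene table: (name, first frame, last frame) on the song timeline.
SCENES = [
    ("take-off", 1, 620),
    ("flight", 621, 1480),
    ("meeting", 1481, 2300),
]

def check_contiguous(scenes):
    """Raise if scenes overlap or leave gaps, so every song frame has exactly one owner."""
    for (name_a, _, end_a), (name_b, start_b, _) in zip(scenes, scenes[1:]):
        if start_b != end_a + 1:
            raise ValueError(f"{name_a} ends at {end_a} but {name_b} starts at {start_b}")

def scene_for(frame, scenes=SCENES):
    """Return the name of the scene containing a song frame (e.g. a drum hit)."""
    starts = [start for _, start, _ in scenes]
    i = bisect_right(starts, frame) - 1
    if i < 0 or frame > scenes[i][2]:
        raise ValueError(f"frame {frame} is outside every scene")
    return scenes[i][0]

check_contiguous(SCENES)
print(scene_for(1500))
```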

This works very well until I realize that I need to change the order of a few clips I had already animated to make the story more coherent. Stones cannot land before they take off! When I shift clips to earlier or later points in time they fall completely out of sync, because the soundtrack cannot be moved. A small disaster in my attempt to finish in time.

The only way I can think of to avoid this in future movies is to first produce a complete animated storyboard. Even if it’s hand-drawn or made out of simple geometric shapes, it would already reveal mistakes in the order of events.

Fixing meshes

- Meshlab crashes.

- Fixing meshes.

Other issues I had

- Unstable physics at the end of the movie.

- Some material setting making everything very slow in Blender. Solution: a script.

- Slow animation playback in Eevee Rendered Viewport (due to normal maps)

- How to use the Simplify curves addon

- Fields do not affect rotation? (no response)

Shaders

- Using GLSL knowledge to create mesh transitions.

I wanted to somehow morph between different backgrounds. What I came up with was to have one background visible and a second one slightly behind it, then dissolve the front background. For that I created a node group called SphericalDissolve: a growing irregular hole with glowing edges.

A spherical dissolve effect used to transition between backgrounds
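The node group itself is not reproduced here, but the logic behind such an effect fits in a few lines. The sketch below is a plain-Python version of the per-point maths a shader could evaluate; the sine-based wobble stands in for a noise texture, and all parameter names and values are assumptions. Animating `radius` over time makes the hole grow; `alpha` would drive the mix between a transparent and an opaque surface, and `glow` the emission strength.

```python
import math

def dissolve(point, center, radius, edge=0.1, noise_amp=0.15, noise_freq=3.0):
    """Return (alpha, glow) for a surface point of the front background.

    alpha: 0 inside the hole (surface dissolved), 1 outside it.
    glow:  1 right at the rim of the hole, fading to 0 over `edge` units outside it.
    """
    dx, dy, dz = (p - c for p, c in zip(point, center))
    dist = math.sqrt(dx * dx + dy * dy + dz * dz)
    # Cheap irregularity: perturb the distance with a product of sines.
    wobble = noise_amp * math.sin(noise_freq * dx) * math.sin(noise_freq * dy) * math.sin(noise_freq * dz)
    d = dist + wobble - radius  # < 0 inside the hole, >= 0 outside
    alpha = 0.0 if d < 0 else 1.0
    glow = max(0.0, 1.0 - d / edge) if d >= 0 else 0.0
    return alpha, glow

# The hole grows over 48 frames; sample one point on the front background.
for frame in (1, 24, 48):
    radius = 2.0 * frame / 48
    print(frame, dissolve((1.0, 0.0, 0.0), (0.0, 0.0, 0.0), radius))
```

The sampled point starts opaque, glows at the moment the rim passes over it, and then disappears, revealing the background behind.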

Scripting

I did some Python scripting to edit the timeline and avoid repetitive work. One script added time jitter to keyframes, making the motion more organic by keeping the rocks from moving perfectly in sync. Another script transferred the physics state from the end of one scene to the beginning of the next.
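The original script is not shown in this post; as a hedged sketch of the jitter idea, this version works on plain (frame, value) lists and shifts all keys of one rock by a single random offset. Whether the original used one offset per rock or per key is not stated, so the per-rock choice is an assumption. Inside Blender the same offset would be added to the x coordinate of each keyframe point’s `co`, `handle_left` and `handle_right` in the rock’s F-curves.

```python
import random

def jitter_keyframes(tracks, max_offset=3, seed=1):
    """Shift every keyframe of each track by one random whole-frame offset.

    `tracks` maps a rock name to a list of (frame, value) keyframes. One offset
    per rock keeps each rock's own timing intact while breaking the perfect
    sync between rocks.
    """
    rng = random.Random(seed)  # seeded, so re-running gives the same result
    jittered = {}
    for name, keys in tracks.items():
        offset = rng.randint(-max_offset, max_offset)  # inclusive range
        jittered[name] = [(frame + offset, value) for frame, value in keys]
    return jittered

# 16 rocks that would otherwise jump at exactly the same frames.
tracks = {f"rock_{i:02d}": [(100, 0.0), (124, 1.5), (148, 0.0)] for i in range(16)}
for name, keys in list(jitter_keyframes(tracks).items())[:3]:
    print(name, [frame for frame, _ in keys])
```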

- Scripts to automate things: Meshroom, renaming textures and models, etc.

- Joining physics across scenes in Blender.

- Scripting in Blender to work faster.
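For the nightly photogrammetry runs, a small batch script is enough. This sketch assumes one sub-folder of photos per location and that the installed Meshroom provides a `meshroom_photogrammetry` command with `--input` and `--output` options (the 2019 releases did; newer ones call it `meshroom_batch`), so check `--help` for your version. Renaming textures and models is not covered here.

```python
import subprocess
from pathlib import Path

# Assumption: command name and options as in Meshroom 2019.x; adjust for your version.
MESHROOM_CLI = "meshroom_photogrammetry"

def meshroom_command(photo_folder, output_root, cli=MESHROOM_CLI):
    """Build the command line that reconstructs one folder of photos."""
    folder = Path(photo_folder)
    return [cli, "--input", str(folder), "--output", str(Path(output_root) / folder.name)]

def reconstruct_all(photos_root, output_root):
    """Reconstruct every sub-folder of photos_root, one after another (e.g. overnight)."""
    for folder in sorted(p for p in Path(photos_root).iterdir() if p.is_dir()):
        cmd = meshroom_command(folder, output_root)
        Path(cmd[-1]).mkdir(parents=True, exist_ok=True)
        subprocess.run(cmd, check=True)  # stop at the first failed reconstruction
```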

I used scripting basically to work faster. Since 16 stones take part in a choreography, and each one has animated properties in a timeline with hundreds of keyframes, I wanted to avoid repetitive work like randomizing animation curves or activating and deactivating things manually.

But the most crucial part was something rather technical: I had the movie split in scenes, and I needed to transfer the physics engine state from the end of one scene to the beginning of the next one. So I wrote a script to do that.
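The script itself is not reproduced in this post. A hedged sketch of the bookkeeping part, in plain Python: poses captured at the end of one scene are copied, by object name, onto the rocks at the start of the next, and any rock present in only one of the two scenes is reported. Inside Blender the end poses could be read, for example, by stepping the first scene to its last frame with `scene.frame_set()` and reading each object’s `matrix_world`; velocities are not handled by this sketch, and the `Pose` type is hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pose:
    location: tuple  # (x, y, z)
    rotation: tuple  # Euler angles in radians

def transfer_state(end_poses, start_poses):
    """Copy each rock's pose at the end of one scene to the start of the next one.

    Both arguments map object names to Pose; start_poses is updated in place.
    Rocks present in only one of the two scenes are returned, sorted, so they
    can be checked by hand instead of being skipped silently.
    """
    for name in end_poses.keys() & start_poses.keys():
        start_poses[name] = end_poses[name]
    return sorted(end_poses.keys() ^ start_poses.keys())

end = {"rock_01": Pose((1.0, 2.0, 3.0), (0.0, 0.0, 0.5))}
start = {"rock_01": Pose((0.0, 0.0, 0.0), (0.0, 0.0, 0.0))}
print(transfer_state(end, start), start["rock_01"])
```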

The results can be amazing, but scripting in Blender is not very inviting for experimenting with code. The documentation is not always easy to understand, nor is it complete. Maybe I should play with it and then make some tutorials :)

Blender community

- Rigid Body across scenes

- Shadow flicker with Eevee at image edges. Solved by rendering two scenes with Cycles.

Final thoughts


Working with Blender for two months slightly changed my view about creative coding.

When I work with a creative coding framework for a while I end up developing things like saving and loading configuration files, animation timelines, modifier tools, a user interface and more. I do enjoy writing code that produces audiovisual output, but implementing and re-implementing such support code does not make me happy.

People often spend years building such a customized framework-inside-the-framework. A positive aspect of doing this may be the creation of novel approaches perfectly tailored to the author’s own needs, instead of using standardized tools.

But when I see the massive GUI in Blender and how efficiently its thousands of options can be used, I look at my own attempts and think that I will never build such a complex environment myself, so I might as well use Blender instead :)

It’s just an idea, not a decision. Of course you don’t need to build something so complex to create amazing work. But the truth is that I really enjoyed creating materials with nodes in the shader editor, replaying animations a hundred times while tweaking animation curves. It’s different. With Blender you are probably trying to do something polished with a fixed duration, instead of a living real time system (what you would create with Processing or similar). Combining both tools is another interesting option.

It was a real pleasure to be able to tweak things almost in real time and to use f-curves to adjust behaviors without relying so much on randomness.

A choreography for 17 rocks and 3 cameras